Diffusion-Conditional

These are some notes I took while working through the Hugging Face diffusion course. I hope they help 😊

Introduction

Unconditional models don’t give much control over what is generated. We can train a conditional model that takes additional inputs to help steer the generation process, but what if we already have a trained unconditional model we’d like to use? Enter guidance, a process by which the model predictions at each step in the generation process are evaluated against some guidance function and modified such that the final generated image is more to our liking.

There are a number of ways to pass in this conditioning information, such as

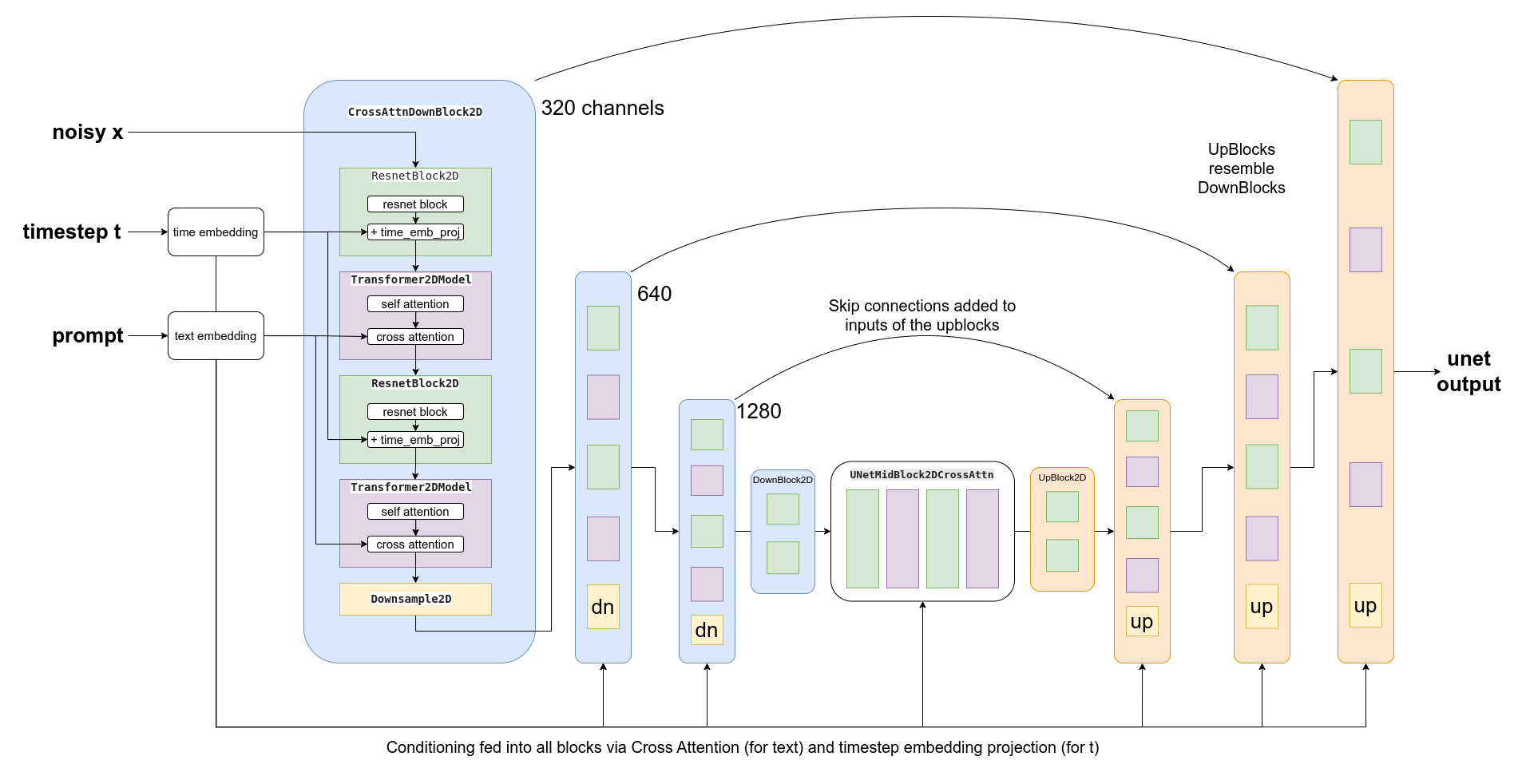

- Feeding it in as additional channels in the input to the UNet. This is often used when the conditioning information is the same shape as the image, such as a segmentation mask, a depth map or a blurry version of the image (in the case of a restoration/superresolution model). It does work for other types of conditioning too. For example, in the notebook, the class label is mapped to an embedding and then expanded to be the same width and height as the input image so that it can be fed in as additional channels.

- Creating an embedding and then projecting it down to a size that matches the number of channels at the output of one or more internal layers of the UNet, and then adding it to those outputs. This is how the timestep conditioning is handled, for example. The output of each Resnet block has a projected timestep embedding added to it. This is useful when you have a vector such as a CLIP image embedding as your conditioning information. A notable example is the ‘Image Variations’ version of Stable Diffusion which does exactly this.

- Adding cross-attention layers that can ‘attend’ to a sequence passed in as conditioning. This is most useful when the conditioning is in the form of some text - the text is mapped to a sequence of embeddings using a transformer model, and then cross-attention layers in the UNet are used to incorporate this information into the denoising path. We’ll see this in action in Unit 3 as we examine how Stable Diffusion handles text conditioning.
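The second option above (project an embedding and add it to a layer's output) can be sketched in a few lines. This is only an illustration, assuming PyTorch; the class name AddProjectedEmbedding and all sizes are hypothetical and not taken from any library.

```python
import torch
from torch import nn


class AddProjectedEmbedding(nn.Module):
    """Hypothetical helper: project a conditioning vector to the channel
    count of a feature map and add it at every spatial location."""

    def __init__(self, emb_dim, channels):
        super().__init__()
        self.proj = nn.Linear(emb_dim, channels)

    def forward(self, features, emb):
        # features: (batch, channels, h, w); emb: (batch, emb_dim)
        projected = self.proj(emb)  # (batch, channels)
        # Broadcast over height and width, then add to the layer output
        return features + projected[:, :, None, None]


layer = AddProjectedEmbedding(emb_dim=128, channels=32)
features = torch.randn(2, 32, 16, 16)  # e.g. the output of a ResNet block
emb = torch.randn(2, 128)              # e.g. a timestep or image embedding
out = layer(features, emb)
print(out.shape)
```

The output keeps the shape of the feature map, which is why this kind of conditioning can be dropped in after any internal layer.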

This is what we call conditional generation.

Guidance

For example, say we wanted to bias the generated images to be a specific color. How would we go about that? Enter guidance, a technique for adding additional control to the sampling process.

Step one is to create our conditioning function: some measure (loss) which we’d like to minimize. Here’s one for the color example, which compares the pixels of an image to a target color (by default a sort of light teal) and returns the average error:
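A minimal sketch of such a loss, assuming PyTorch, images scaled to (-1, 1) with shape (batch, 3, height, width), and a default target of (0.1, 0.9, 0.5) in RGB as the "light teal". The default value is an assumption, so adjust it to taste.

```python
import torch


def color_loss(images, target_color=(0.1, 0.9, 0.5)):
    """Mean absolute difference between every pixel and a target RGB color.

    images: tensor of shape (batch, 3, h, w) with values in (-1, 1).
    target_color: RGB values in (0, 1); the default is an assumed light teal.
    """
    target = torch.tensor(target_color, dtype=images.dtype, device=images.device)
    target = target * 2 - 1               # map (0, 1) to (-1, 1), like the images
    target = target[None, :, None, None]  # shape (1, 3, 1, 1) so it broadcasts
    return torch.abs(images - target).mean()


images = torch.zeros(2, 3, 8, 8)  # mid-grey images
print(color_loss(images).item())
```

The loss is zero only when every pixel already has the target color, so lowering it pulls the whole image toward that color.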


Next, we’ll make a modified version of the sampling loop where, at each step, we do the following:

- Create a new version of x that has requires_grad = True

- Calculate the denoised version (x0)

- Feed the predicted x0 through our loss function

- Find the gradient of this loss function with respect to x

- Use this conditioning gradient to modify x before we step with the scheduler, hopefully pushing x in a direction that will lead to lower loss according to our guidance function
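The steps above can be sketched as a single update function. This assumes PyTorch and a noise-predicting model; the function name guided_update, the predict_noise callable, and the alpha_bar_t argument (the cumulative noise-schedule product at the current timestep) are all hypothetical names chosen for illustration, and the scheduler step itself is left out.

```python
import torch


def guided_update(x, predict_noise, alpha_bar_t, loss_fn, guidance_scale=40.0):
    """One guidance update on the noisy sample x, applied before the scheduler step."""
    # 1. New version of x that tracks gradients
    x = x.detach().requires_grad_(True)
    # 2. Predict the noise, then the denoised image x0 implied by it
    noise_pred = predict_noise(x)
    x0 = (x - (1 - alpha_bar_t) ** 0.5 * noise_pred) / alpha_bar_t ** 0.5
    # 3. Feed the predicted x0 through the loss function
    loss = loss_fn(x0) * guidance_scale
    # 4. Gradient of the loss with respect to x
    grad = torch.autograd.grad(loss, x)[0]
    # 5. Move x against the gradient, i.e. toward lower loss
    return (x - grad).detach(), loss.item()


# Toy usage: a fake "model" and a loss that prefers dark images
x = torch.ones(1, 3, 8, 8)
new_x, loss_value = guided_update(
    x,
    predict_noise=lambda z: 0.1 * z,
    alpha_bar_t=0.5,
    loss_fn=lambda x0: (x0 ** 2).mean(),
    guidance_scale=10.0,
)
print(loss_value, new_x.mean().item())
```

In a full sampling loop the returned x would then be passed, together with the noise prediction, to the scheduler's step for the current timestep. A larger guidance_scale pushes harder toward the target at the risk of distorting the image.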


CLIP Guidance

CLIP is a model created by OpenAI that allows us to compare images to text captions. This is extremely powerful, since it allows us to quantify how well an image matches a prompt. And since the process is differentiable, we can use this as a loss function to guide our diffusion model!

We won’t go too much into the details here. The basic approach is as follows:

- Embed the text prompt to get a 512-dimensional CLIP embedding of the text

- For every step in the diffusion model process:

  - Make several variants of the predicted denoised image (having multiple variations gives a cleaner loss signal)

  - For each one, embed the image with CLIP and compare this embedding with the text embedding of the prompt (using a measure called ‘Great Circle Distance Squared’)

  - Calculate the gradient of this loss with respect to the current noisy x and use this gradient to modify x before updating it with the scheduler.
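The comparison step can be sketched on its own, assuming PyTorch and that the CLIP image and text embeddings have already been computed (random or hand-written tensors stand in for them here). The function name spherical_dist_loss is an assumption. For unit vectors separated by an angle θ, the straight-line distance between them is 2·sin(θ/2), so the expression below recovers θ²/2.

```python
import torch
import torch.nn.functional as F


def spherical_dist_loss(image_features, text_features):
    """Squared great-circle distance (up to a factor of 1/2) between embeddings.

    image_features: (n_images, dim); text_features: (n_texts, dim).
    Returns the mean over all image/text pairs.
    """
    img = F.normalize(image_features, dim=-1).unsqueeze(1)  # (n_images, 1, dim)
    txt = F.normalize(text_features, dim=-1).unsqueeze(0)   # (1, n_texts, dim)
    half_chord = (img - txt).norm(dim=-1).div(2).clamp(max=1.0)
    return torch.asin(half_chord).pow(2).mul(2).mean()


# Stand-ins for CLIP embeddings of several image variants and one prompt
image_features = torch.randn(4, 512)
text_features = torch.randn(1, 512)
print(spherical_dist_loss(image_features, text_features).item())
```

Because every operation is differentiable, the gradient of this value can flow back through the image encoder to the noisy x, which is what makes it usable as a guidance loss.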


- If you check out some code for CLIP-guided diffusion in practice, you’ll see a more complex approach with a better class for picking random cutouts from the images and lots of additional tweaks to the loss function for better performance.

- Before text-conditioned diffusion models came along, this was the best text-to-image system there was! Our little toy version here has lots of room to improve, but it captures the core idea: thanks to guidance plus the amazing capabilities of CLIP, we can add text control to an unconditional diffusion model 🎨.

Class-Conditioned DM

As mentioned in the introduction to this unit, feeding the class label in as extra input channels is just one of many ways we could add conditioning information to a diffusion model; it has been chosen here for its relative simplicity.

Creating a Class-Conditioned UNet

The way we’ll feed in the class conditioning is as follows:

- Create a standard UNet2DModel with some additional input channels

- Map the class label to a learned vector of shape (class_emb_size) via an embedding layer

- Concatenate this information as extra channels for the internal UNet input with net_input = torch.cat((x, class_cond), 1)

- Feed this net_input (which has (class_emb_size+1) channels in total) into the UNet to get the final prediction

In this example I’ve set the class_emb_size to 4, but this is completely arbitrary and you could explore having it size 1 (to see if it still works), size 10 (to match the number of classes), or replacing the learned nn.Embedding with a simple one-hot encoding of the class label directly.

This is what the implementation looks like:
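A minimal sketch of those four steps, assuming PyTorch. To stay runnable without the diffusers library, a small two-layer convolutional network stands in for UNet2DModel; that stand-in, the class name ClassConditionedNet, and the layer sizes are assumptions for illustration. Unlike a real UNet, the stand-in ignores the timestep t.

```python
import torch
from torch import nn


class ClassConditionedNet(nn.Module):
    def __init__(self, num_classes=10, class_emb_size=4):
        super().__init__()
        # Maps each class label to a learned vector of size class_emb_size
        self.class_emb = nn.Embedding(num_classes, class_emb_size)
        # Stand-in for UNet2DModel(in_channels=1 + class_emb_size, out_channels=1, ...)
        self.model = nn.Sequential(
            nn.Conv2d(1 + class_emb_size, 16, kernel_size=3, padding=1),
            nn.SiLU(),
            nn.Conv2d(16, 1, kernel_size=3, padding=1),
        )

    def forward(self, x, t, class_labels):
        bs, ch, h, w = x.shape
        class_cond = self.class_emb(class_labels)  # (bs, class_emb_size)
        # Repeat the embedding at every pixel: (bs, class_emb_size, h, w)
        class_cond = class_cond.view(bs, -1, 1, 1).expand(bs, -1, h, w)
        # Concatenate as extra channels: (bs, 1 + class_emb_size, h, w)
        net_input = torch.cat((x, class_cond), 1)
        # With a diffusers UNet this would be self.model(net_input, t).sample
        return self.model(net_input)


net = ClassConditionedNet()
x = torch.randn(8, 1, 28, 28)          # MNIST-sized noisy images
t = torch.randint(0, 1000, (8,))       # timesteps (unused by the stand-in)
y = torch.randint(0, 10, (8,))         # digit labels
print(net(x, t, y).shape)
```

The only change from an unconditional setup is the wider first layer; the output still has one channel, matching the image.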


Training and Sampling

Where previously we’d do something like prediction = unet(x, t) we’ll now add the correct labels as a third argument (prediction = unet(x, t, y)) during training, and at inference we can pass whatever labels we want and if all goes well the model should generate images that match. y in this case is the labels of the MNIST digits, with values from 0 to 9.

The training loop is very similar to the example from Unit 1.
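One training step with labels can be sketched as follows, assuming PyTorch, a noise-prediction objective, and a linear beta schedule from 1e-4 to 0.02 over 1000 steps. The names train_step and TinyConditionalNet are hypothetical; the tiny model exists only so the example runs on its own and would be replaced by the class-conditioned UNet.

```python
import torch
from torch import nn


class TinyConditionalNet(nn.Module):
    """Hypothetical stand-in model that accepts (x, t, y) like the conditioned UNet."""

    def __init__(self, num_classes=10):
        super().__init__()
        self.class_emb = nn.Embedding(num_classes, 1)
        self.conv = nn.Conv2d(2, 1, kernel_size=3, padding=1)

    def forward(self, x, t, y):
        cond = self.class_emb(y).view(-1, 1, 1, 1).expand(-1, -1, x.shape[2], x.shape[3])
        return self.conv(torch.cat((x, cond), 1))


def train_step(model, optimizer, x, y, alphas_cumprod):
    """Noise a batch, predict the noise given the labels, and take one optimizer step."""
    noise = torch.randn_like(x)
    t = torch.randint(0, len(alphas_cumprod), (x.shape[0],))
    a_bar = alphas_cumprod[t].view(-1, 1, 1, 1)
    noisy_x = a_bar.sqrt() * x + (1 - a_bar).sqrt() * noise  # forward diffusion
    pred = model(noisy_x, t, y)                              # labels as third argument
    loss = nn.functional.mse_loss(pred, noise)
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()
    return loss.item()


betas = torch.linspace(1e-4, 0.02, 1000)        # assumed linear schedule
alphas_cumprod = torch.cumprod(1 - betas, dim=0)
model = TinyConditionalNet()
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)
x = torch.rand(4, 1, 28, 28) * 2 - 1            # fake images in (-1, 1)
y = torch.randint(0, 10, (4,))                  # fake digit labels
print(train_step(model, optimizer, x, y, alphas_cumprod))
```

In a real run x and y would come from an MNIST dataloader and the step would be repeated over many batches and epochs.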


Stable Diffusion Overview

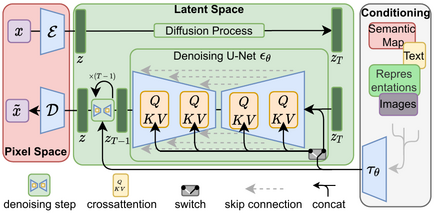

This unit introduces Stable Diffusion (SD), a powerful text-conditioned latent diffusion model, and explores what it can do.

Latent Diffusion

As image size grows, so does the computational power required to work with those images. This is especially pronounced in an operation called self-attention, where the amount of operations grows quadratically with the number of inputs. A 128px square image has 4x as many pixels as a 64px square image, and so requires 16x (i.e. 4²) the memory and compute in a self-attention layer. This is a problem for anyone who’d like to generate high-resolution images!
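The arithmetic can be checked with a few lines of Python. This sketch assumes one attention input per pixel and a cost proportional to the square of the number of inputs; the function name is made up for illustration.

```python
def attention_cost_ratio(side_small, side_large):
    """Relative self-attention cost of two square images, one input per pixel."""
    inputs_small = side_small ** 2
    inputs_large = side_large ** 2
    # Cost grows with the square of the number of inputs
    return (inputs_large / inputs_small) ** 2


print(attention_cost_ratio(64, 128))  # 16.0
```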

Latent diffusion helps to mitigate this issue by using a separate model called a Variational Auto-Encoder (VAE) to compress images to a smaller spatial dimension. The rationale behind this is that images tend to contain a large amount of redundant information - given enough training data, a VAE can hopefully learn to produce a much smaller representation of an input image and then reconstruct the image based on this small latent representation with a high degree of fidelity. The VAE used in SD takes in 3-channel images and produces a 4-channel latent representation with a reduction factor of 8 for each spatial dimension. That is, a 512px square input image will be compressed down to a 4x64x64 latent.
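The shape bookkeeping can be written out as a small sketch in plain Python, using the numbers quoted above (4 latent channels, a reduction factor of 8 per spatial dimension). The function names are hypothetical.

```python
def latent_shape(height, width, latent_channels=4, downscale=8):
    """Shape of the VAE latent for an image of the given size."""
    return (latent_channels, height // downscale, width // downscale)


def compression_ratio(height, width, image_channels=3):
    """How many times fewer values the latent holds than the input image."""
    c, h, w = latent_shape(height, width)
    return (image_channels * height * width) / (c * h * w)


print(latent_shape(512, 512))       # (4, 64, 64)
print(compression_ratio(512, 512))  # 48.0
```

So the diffusion model works on 48x fewer values than it would in pixel space for a 512px image.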

By applying the diffusion process on these latent representations rather than on full-resolution images, we can get many of the benefits that would come from using smaller images (lower memory usage, fewer layers needed in the UNet, faster generation times…) and still decode the result back to a high-resolution image once we’re ready to view the final result. This innovation dramatically lowers the cost to train and run these models.

Text Conditioning

In Unit 2 we showed how feeding additional information to the UNet allows us to have some additional control over the types of images generated. We call this conditioning. Given a noisy version of an image, the model is tasked with predicting the denoised version based on additional clues such as a class label or, in the case of Stable Diffusion, a text description of the image. At inference time, we can feed in the description of an image we’d like to see and some pure noise as a starting point, and the model does its best to ‘denoise’ the random input into something that matches the caption.

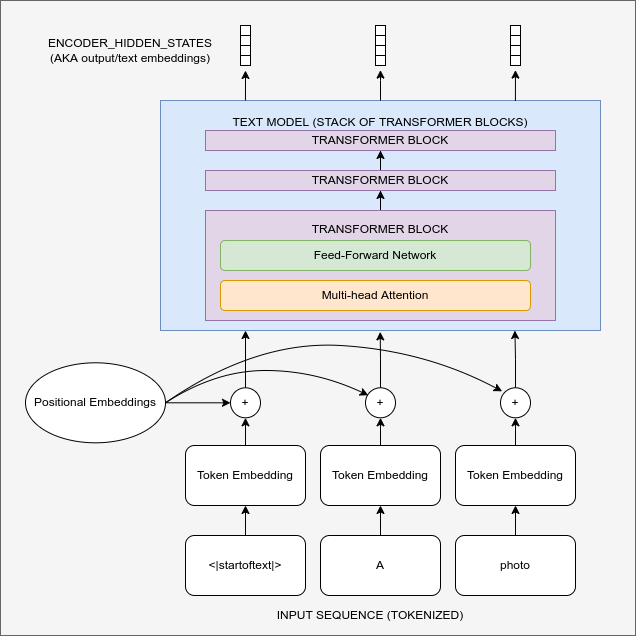

Diagram showing the text encoding process which transforms the input prompt into a set of text embeddings (the encoder_hidden_states) which can then be fed in as conditioning to the UNet.

For this to work, we need to create a numeric representation of the text that captures relevant information about what it describes. To do this, SD leverages a pre-trained transformer model based on something called CLIP.

CLIP’s text encoder was designed to process image captions into a form that could be used to compare images and text, so it is well suited to the task of creating useful representations from image descriptions. An input prompt is first tokenized (based on a large vocabulary where each word or sub-word is assigned a specific token) and then fed through the CLIP text encoder, producing a 768-dimensional (in the case of SD 1.X) or 1024-dimensional (SD 2.X) vector for each token. To keep things consistent, prompts are always padded/truncated to be 77 tokens long, and so the final representation which we use as conditioning is a tensor of shape 77x768 (SD 1.X) or 77x1024 (SD 2.X) per prompt.

OK, so how do we actually feed this conditioning information into the UNet for it to use as it makes predictions? The answer is something called ==cross-attention==. Scattered throughout the UNet are cross-attention layers. Each spatial location in the UNet can ‘attend’ to different tokens in the text conditioning, bringing in relevant information from the prompt. The diagram above shows how this text conditioning (as well as timestep-based conditioning) is fed in at different points. As you can see, at every level the UNet has ample opportunity to make use of this conditioning!
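A single-head cross-attention layer can be sketched as below, assuming PyTorch. The class name and the sizes (320 feature channels, 768-dimensional text embeddings, an 8x8 feature map) are illustrative assumptions, and real implementations add multiple heads, normalization and residual connections.

```python
import torch
from torch import nn


class CrossAttention(nn.Module):
    """Single-head cross-attention: image locations query the text tokens."""

    def __init__(self, query_dim, context_dim, inner_dim=64):
        super().__init__()
        self.to_q = nn.Linear(query_dim, inner_dim, bias=False)
        self.to_k = nn.Linear(context_dim, inner_dim, bias=False)
        self.to_v = nn.Linear(context_dim, inner_dim, bias=False)
        self.to_out = nn.Linear(inner_dim, query_dim)
        self.scale = inner_dim ** -0.5

    def forward(self, x, context):
        # x: (batch, h*w, query_dim), one row per spatial location
        # context: (batch, n_tokens, context_dim), the text embeddings
        q = self.to_q(x)
        k = self.to_k(context)
        v = self.to_v(context)
        # Each location gets a distribution over tokens: (batch, h*w, n_tokens)
        attn = torch.softmax(q @ k.transpose(-1, -2) * self.scale, dim=-1)
        # Weighted mix of token values, projected back to the feature size
        return self.to_out(attn @ v)


layer = CrossAttention(query_dim=320, context_dim=768)
features = torch.randn(2, 8 * 8, 320)  # flattened 8x8 feature map
text_emb = torch.randn(2, 77, 768)     # 77 tokens per prompt
print(layer(features, text_emb).shape)
```

The output has the same shape as the image features, so the layer can sit between existing UNet blocks while the text only ever enters through the keys and values.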

Classifier-free Guidance

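It turns out that even with all the effort put into making the text conditioning as useful as possible, the model still tends to rely mostly on the noisy input image rather than the prompt when making its predictions. In a way this makes sense - many captions are only loosely related to their associated images, so the model learns not to lean too heavily on the descriptions! However, this behaviour is undesirable when generating new images - if the model doesn’t follow the prompt, we may get images that have nothing to do with our description.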

To fix this, we use a trick called Classifier-Free Guidance (CFG). During training, text conditioning is sometimes kept blank, forcing the model to learn to denoise images with no text information whatsoever (unconditional generation). Then at inference time, we make two separate predictions: one with the text prompt as conditioning and one without. We can then use the difference between these two predictions to create a final combined prediction that pushes even further in the direction indicated by the text-conditioned prediction according to some scaling factor (the guidance scale), hopefully resulting in an image that better matches the prompt. The image above shows the outputs for a prompt at different guidance scales - as you can see, higher values result in images that better match the description.

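- This is similar to dropout, applied to the text conditioning during training; a coefficient then controls the strength of the guidance.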
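The inference-time combination described above can be sketched in one line, assuming PyTorch tensors for the two noise predictions. The function name and the default scale of 7.5 are assumptions for illustration.

```python
import torch


def classifier_free_guidance(noise_uncond, noise_text, guidance_scale=7.5):
    """Combine the unconditional and text-conditioned predictions.

    Starts from the unconditional prediction and moves along the direction
    (noise_text - noise_uncond), scaled by guidance_scale.
    """
    return noise_uncond + guidance_scale * (noise_text - noise_uncond)


noise_uncond = torch.zeros(1, 4, 64, 64)  # prediction with a blank prompt
noise_text = torch.ones(1, 4, 64, 64)     # prediction with the real prompt
combined = classifier_free_guidance(noise_uncond, noise_text, guidance_scale=2.0)
print(combined.mean().item())  # 2.0
```

A scale of 1 simply returns the text-conditioned prediction and a scale of 0 returns the unconditional one; values above 1 extrapolate past the conditioned prediction, which is the "push even further" effect.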